Whether these two shared popcorn at the same screening of Terminator 4 is unknown, but the Pentagon is unimpressed. A memo released by Undersecretary of Defense Frank Kendall calls for a new study to:

“identify the science, engineering, and policy problems that must be solved to permit greater operational use of autonomy across all war-fighting domains…Emphasis will be given to exploration of the bounds – both technological and social – that limit the use of autonomy across a wide range of military operations.”

More to the point, a Defense Department official summarized the study as developing “a real roadmap for autonomy.”

Fiction, Science Fiction, and the Roomba Vacuum in Your Closet

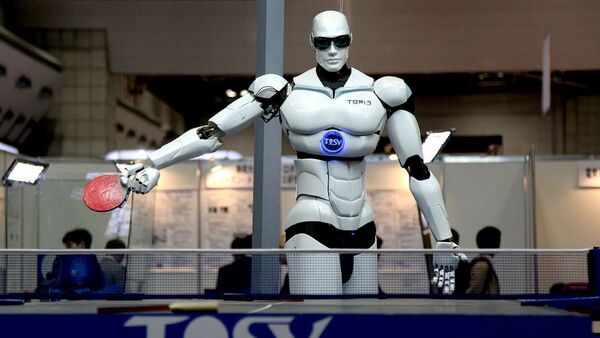

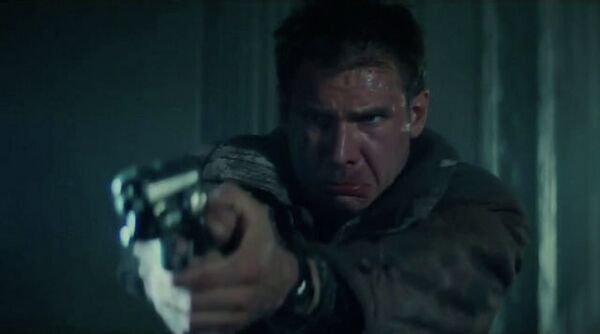

Artificial intelligence, put simply, is a complex computer system designed to mimic the human brain’s capacity for visual perception, speech recognition, and problem solving, among other things. Think HAL 9000 from “2001: A Space Odyssey.” Think the runaway replicants from “Blade Runner.” Think Wall-E from, well, you know.

But also think about less advanced examples. Siri, Apple’s cellphone assistant program, is a much less threatening form of artificial intelligence, one barely capable of finding a Quiznos in midtown. There’s also Watson, the IBM program designed to answer questions posed in natural language, which competed in – and won – a much-publicized “Jeopardy” tournament.

The weaknesses of these real A.I.s demonstrate just how difficult development can be. Watson required a roomful of large computer servers in order to store and process data, and to interpret colloquial human speech.

These are, for all intents and purposes, single-function devices, far from achieving the multi-function capacity of the human brain. A Roomba vacuum is great for cleaning crumbs, but it won’t – can’t – eat you in your sleep.

Ray Kurzweil, a director of engineering at Google, posits that true artificial intelligence would require a complete reverse-engineering of the human brain, something he doesn’t see happening until at least 2030.

No Boots on the Ground

The United States military already utilizes several types of artificial intelligence. The F-35 fighter jet features some of the most advanced autopilot functions in aircraft history. Earlier this year, DARPA, the Defense Advanced Research Projects Agency, began work on the Aircrew Labor In-Cockpit Automation System, which aims to “reduce pilot workload.”

The use of unmanned aerial vehicles, or UAVs, known more colloquially as drones, has been well documented throughout the wars in Iraq and Afghanistan. Stronger artificial intelligence would allow these aircraft to become even more autonomous.

The Pentagon is also worried about the increased possibility of cyberwarfare. The more contact UAVs have with operators on the ground, the greater the opportunities for hostile agents to hack into their systems. Greater autonomy could shield military machines from the kind of attacks launched against Sony Pictures in December.

“Communication with drones can be jammed…that creates a push for more autonomy in the weapon,” says technologist Ramez Naam. “We will see a vast increase in how many of our weapons will be automated in some way.”

While critics may worry that greater autonomy could mean less culpability in the event of wrongful deaths, many Pentagon experts point to a 2012 Defense Department directive that forbids the automation of a vehicle’s “lethal effects.”

The directive bans the use of unmanned systems to “select and engage individual targets or specific target groups that have not been previously selected by an authorized human operator.”

No boots on the ground doesn’t mean no brains in the sky.

Whether it will actually take another 15 years to achieve the kind of artificial intelligence that Kurzweil envisions is hard to say. Even given how mind-blowing many existing technologies can be, we’re still several advancements away from the kind of apocalyptic end depicted in Hollywood.

For better or worse, the Pentagon is looking to close the gap.