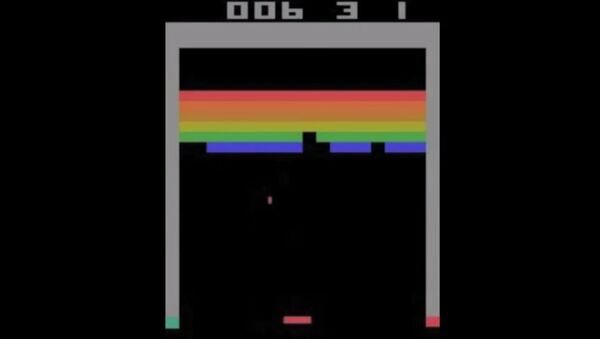

The “deep Q-network” (DQN) program, created by DeepMind, the British tech firm Google acquired last year, learned to play 49 different old-school Atari games, such as Space Invaders and Breakout, and came up with its own strategies for winning.

“The ultimate goal is to build smart, general-purpose [learning] machines. We’re many decades off from doing that,” said artificial intelligence researcher and DeepMind co-founder Demis Hassabis, who coauthored a study published this week in the journal Nature. “But I do think this is the first significant rung of the ladder that we’re on.”

“With Deep Blue, it was a team of programmers and grandmasters that distilled the knowledge into a program,” said Hassabis, referring to IBM’s chess-playing computer. “We’ve built algorithms that learn from the ground up.”

According to the researchers, the algorithm is loosely modeled on biological neural networks, which lets it take on complex, multidimensional tasks that require abstraction. Rather than being programmed with the rules, the machine “learns” by trial and error: it sees only the raw screen pixels and the score, and gradually works out which actions earn points.

“A bit like a baby opening their eyes and seeing the world for the first time,” said Hassabis.

Though the computer mastered games like “Space Invaders,” its capabilities still have limits. It performed poorly at “Montezuma’s Revenge” and “Ms. Pac-Man,” which require more abstract thinking, according to coauthor Volodymyr Mnih.

For now, the machine’s intelligence is roughly comparable to that of a toddler.

“It’s mastering and understanding the construction of these games, but we wouldn’t say yet that it’s building conceptual knowledge, or abstract knowledge,” said Hassabis.