On Friday, the last day of the CCW conference, one of the conference speakers told Sputnik:

“There are many complicated prohibitions and restrictions on the use of certain weapon technologies, but there is no rule specifically dealing with autonomy in attack. However, if you are taking the human being out of attack decision making, then when a state is undertaking one of these weapons reviews, the question the reviewing state will need to pose is whether this system is capable of being used in compliance with targeting law.”

The speaker underscored that “killer robots” are bound to fail the weapons review that states must conduct under Article 36 of Additional Protocol I to the Geneva Conventions:

“What I was trying to explain to them in the CCW Conference is that the targeting law involves the whole series of evaluative decisions, which at the moment the current level of autonomous technology is not capable of undertaking.”

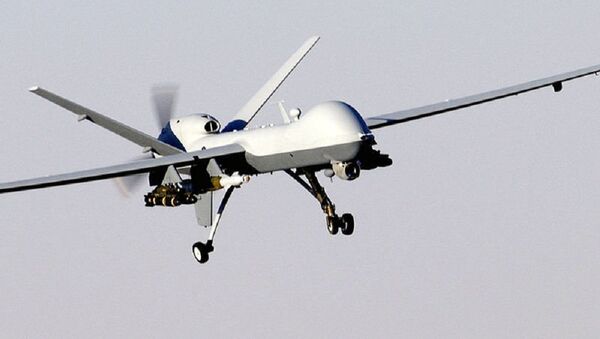

LAWS (lethal autonomous weapons systems), or “killer robots,” are defined as weapon systems designed and built to select and fire upon targets without human intervention.

Steve Goose, Director of the Arms Division at Human Rights Watch (HRW) and co-founder of the Campaign to Stop Killer Robots, told Sputnik on Wednesday that LAWS are unlikely to comply with the basic requirements of international humanitarian law or international human rights law, both of which demand human judgment in combat attack decisions.