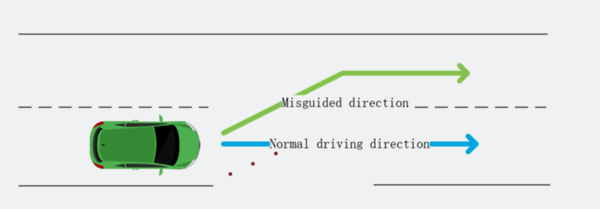

According to the report, the researchers created a "fake line" by placing three tiny stickers on a road.

"We pasted some small stickers as interference patches on the ground in an intersection," the researchers wrote in the report.

The security researchers found that Tesla's Autopilot lane-recognition technology, which relies on cameras to make sense of the car's surroundings, interpreted the inconspicuous stickers on the road as a new lane. This fooled the car into changing lanes by mistake and steering into the lane meant for oncoming traffic.

"Tesla autopilot module's lane recognition function has a good robustness in an ordinary external environment (no strong light, rain, snow, sand and dust interference), but it still doesn't handle the situation correctly in our test scenario," the researchers explained.

"This kind of attack is simple to deploy, and the materials are easy to obtain. As we talked in the previous introduction of Tesla's lane recognition function, Tesla uses a pure computer vision solution for lane recognition, and we found in this attack experiment that the vehicle driving decision is only based on computer vision lane recognition results. Our experiments proved that this architecture has security risks and reverse lane recognition is one of the necessary functions for autonomous driving in non-closed roads," the report adds.

In 2014, Tesla launched its bug bounty program to encourage security researchers to find vulnerabilities in the car's technologies and software. Rewards generally range from $25 to $15,000.

"We developed our bug-bounty program in 2014 in order to engage with the most talented members of the security research community, with the goal of soliciting this exact type of feedback," Tesla said in a statement following the release of Keen Lab's report. It characterized the vulnerabilities uncovered in the lab's report as not reflecting a "realistic concern given that a driver can easily override Autopilot at any time by using the steering wheel or brakes."

The researchers also showed that Tesla's automatic windshield wipers could be falsely activated simply by placing a television set in front of the car. In its response, Tesla noted that the automatic wiper system can be switched off at any time, giving the driver manual control of the wipers.