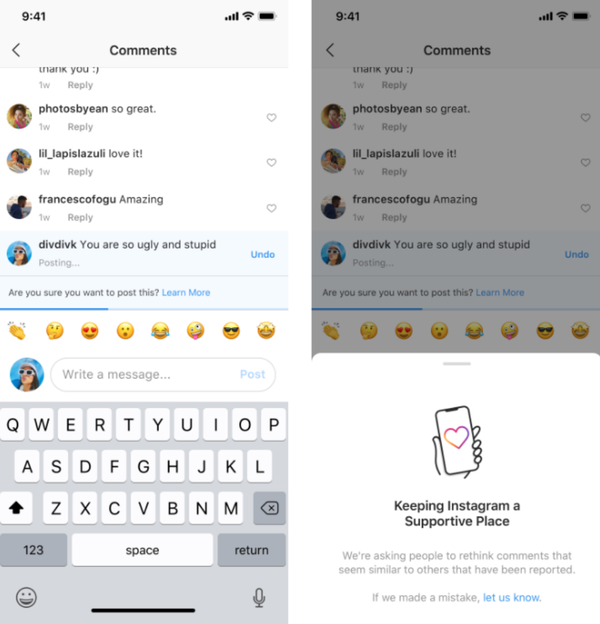

In a Monday blog post, Adam Mosseri, the head of Instagram, announced that the company had started "rolling out" a new, artificial intelligence-powered feature that automatically alerts users when a comment they have written may be offensive, before it is published.

“This intervention gives people a chance to reflect and undo their comment and prevents the recipient from receiving the harmful comment notification,” Mosseri wrote. “From early tests of this feature, we have found that it encourages some people to undo their comment and share something less hurtful once they have had a chance to reflect.”

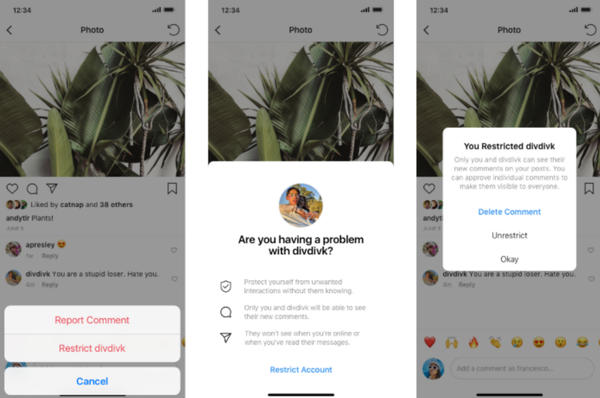

And then there's the planned feature known as "Restrict," which has yet to even begin its testing phase. Similar to shadowbanning, Restrict will let Instagrammers limit how online trolls can interact with them without the restricted accounts ever being aware of it.

“We wanted to create a feature that allows people to control their Instagram experience, without notifying someone who may be targeting them,” Mosseri stated, before offering some details on the new tool.

“Once you Restrict someone, comments on your posts from that person will only be visible to that person. You can choose to make a restricted person’s comments visible to others by approving their comments. Restricted people won’t be able to see when you’re active on Instagram or when you’ve read their direct messages.”

"It's our responsibility to create a safe environment on Instagram. This has been an important priority for us for some time, and we are continuing to invest in better understanding and tackling this problem. I look forward to sharing more updates soon," he wrote in closing.

This isn’t the first time that Instagram has tried to tackle its cyberbullying issues. According to Vox, the Facebook-owned platform previously deployed a comment and emoji filter in 2016 before launching an automated “offensive comment” filter the following year. A filter that blocks “bullying comments” was added to the platform in 2018.