A recent article on Global Voices claimed that an "internet research" analysis using the NodeXL tool found a "staggering" network of 2,900 "Kremlin bots." However, an analysis of Russian-language bots on Twitter, as well as of the infrastructure behind them, shows that this is most likely not the case.

Recently, hype over a series of "Kremlin trolls" stories was apparently used to justify the 2016 budget of the US government's international broadcaster, which includes $15.4 million for "Countering a Revanchist Russia" in social networks.

The biggest problem with the "research" was that its authors had no real idea of how such Twitter botnets actually work. More significantly, it failed to take into account that bot creators and bot users tend to be different people, and that the posts bots make have little relation to their actual goals and more to do with avoiding detection.

It also illustrates the problem with "internet research" being used as evidence by people who are not familiar with the environment they are researching.

We looked at several "political" bot trends with Aleksandr Zimarin, a social media consultant to a Russian State Duma Deputy and a Federation Council Senator. Zimarin is also the head of the "Open Internet" marketing agency and director of the marketing department for the venture capital fund ItRuStore.

Case 1: Who is Talking About Nemtsov?

The first example given in the article is a series of tweets claiming that "Ukrainians killed Nemtsov out of jealousy." Unfortunately, the bots that made the posts have been deleted and could not be analyzed.

Is this an attempt to create noise [to shut down an opinion] or promote a post?

It could be, but it's unlikely. The real reason is something else, but it's practically impossible to determine. In theory, monotonous messages could be used to promote a topic, for example if real users read the bots' feeds, but that is not the goal here. It could also be an attempt to make a single word trend, for example "Nemtsov," but then the message would be less provocative.

If we assume that these tweets are the result of someone's attempt to create noise, then this person is either a newbie, or the program they use to control the botnet had a glitch and the text came out differently from what was intended.


Here's where weird tweets come from in general: botnets need to be "pumped through" with something. More competent "botovods" [botnet masters] use programs that generate text algorithmically in a way that is hard to notice, so every bot makes a unique tweet. This is not so that you don't find out that it's a bot, but so that Twitter doesn't find out that it's a bot. Less experienced botnet masters simply push the same tweets through to create activity. This looks like a case of that.
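To illustrate why identical tweets are risky for a botnet, here is a minimal sketch of a copy-paste check a platform could run, assuming tweets are available as (account, text) pairs. This is not Twitter's actual detection method, which is not public; the function names `normalize` and `find_copy_paste_clusters` and the `min_accounts` threshold are hypothetical.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations still match."""
    return " ".join(text.lower().split())


def find_copy_paste_clusters(tweets, min_accounts=3):
    """Return normalized texts posted by at least `min_accounts` distinct accounts.

    `tweets` is an iterable of (account, text) pairs; this input format is a
    hypothetical assumption for illustration, not a real API response.
    """
    accounts_by_text = defaultdict(set)
    for account, text in tweets:
        accounts_by_text[normalize(text)].add(account)
    return {
        text: sorted(accounts)
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


sample = [
    ("bot_a", "Ukrainians killed Nemtsov out of jealousy"),
    ("bot_b", "ukrainians killed  Nemtsov out of jealousy"),
    ("bot_c", "Ukrainians killed Nemtsov out of jealousy"),
    ("user_x", "Watching the news tonight"),
]

print(find_copy_paste_clusters(sample))
```

Bots that each post a unique, algorithmically generated text would not show up in this kind of grouping at all, which is the advantage Zimarin attributes to more competent botnet masters.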

Case 2: "Putin doesn't understand that he won't win the battle this way?"

A second example, which ties in with the first, is a tweet that promotes a point of view. It is probably similar in nature to the tweets claiming that "Nemtsov was killed by Ukrainians."

Is this just a copy of a comment from a social network or an attempt to promote an opinion?

It looks like the algorithm simply pulled a comment from somewhere and is posting it. It's possible that the botnet master uses keywords and semantics to determine a comment's tone, for example to build an "opposition" bot. Again, it may be a newbie botnet master.
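The keyword approach described above can be sketched roughly as follows. This is a minimal illustration assuming plain keyword matching only (the "semantics" part is left out); the keyword lists and the functions `classify_tone` and `pick_comments_for_bot` are hypothetical and not taken from any real botnet software.

```python
from typing import Optional

# Hypothetical keyword lists for illustration only; the lists a real
# botnet master would use are unknown.
TONE_KEYWORDS = {
    "opposition": ["won't win", "protest", "resign"],
    "loyal": ["stability", "strong leader", "thank you, putin"],
}


def classify_tone(comment: str) -> Optional[str]:
    """Return the tone with the most keyword hits, or None if nothing matches.

    Ties go to the tone listed first in TONE_KEYWORDS.
    """
    text = comment.lower()
    scores = {
        tone: sum(text.count(kw) for kw in keywords)
        for tone, keywords in TONE_KEYWORDS.items()
    }
    best_tone, best_score = max(scores.items(), key=lambda item: item[1])
    return best_tone if best_score > 0 else None


def pick_comments_for_bot(comments, bot_tone):
    """Keep only scraped comments whose detected tone matches the bot's persona."""
    return [c for c in comments if classify_tone(c) == bot_tone]


scraped = [
    "Putin doesn't understand that he won't win the battle this way?",
    "Nice weather in Moscow today",
]
print(pick_comments_for_bot(scraped, "opposition"))
```

A filter this crude would happily repost any comment containing the right phrase, which fits the impression of a bot copying a comment verbatim without regard to context.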

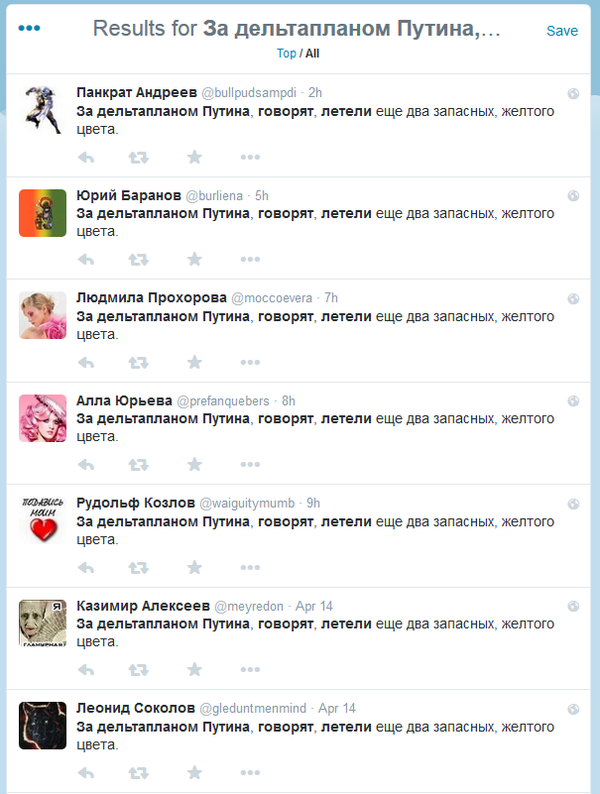

Case 3: Putin Jokes From 2012

In 2012, Russian President Vladimir Putin flew in a motorized hang glider to lead a flock of cranes. The stunt was seen as a PR blunder and spawned a series of jokes.

Although the joke dates from 2012, bots continue tweeting it three to five times a day on average.

Is this a phrase that was inserted into the database a long time ago?

Yes, it looks like an abandoned botnet that keeps running its posting algorithm. That is, the bots are no longer used, but they keep posting because no one turned them off.

Where the Bots Come From

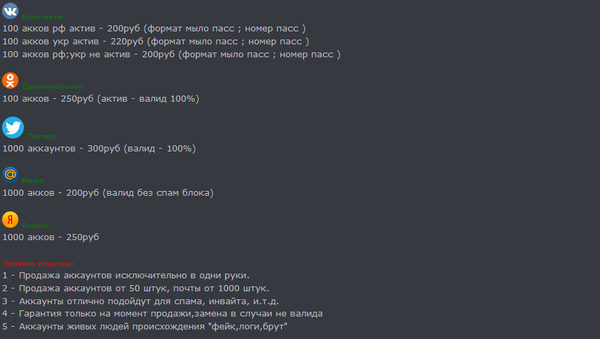

How are these bots created?

There are two main ways. Newregs are accounts created automatically by a program. They can be simple, empty accounts, or accounts with an avatar, name, biography and several tweets. The difference lies in how long they take to create, and therefore in their price.


Offers are accounts stolen from real users, whose name and email are then changed so that the owner can't get the account back. They are usually stolen through websites that allow you to log in through Twitter.

Is the lack of links or hashtags other than #joke or #humor a form of cover?

This is more complicated; links and hashtags can also be a form of cover. People who control botnets have no moral compass and can work on two fronts simultaneously: politics on one side, and on the other promoting a website through SEO or making a commercial hashtag trend. There is no point in trying to work out the purpose of a bot's actions from the tweets it makes, because besides the above, it also posts cover tweets generated by very unusual algorithms.

Why are they created?

The main clients of social network bots are spam mailing lists, SEO promotion and SMM reports (metrics such as "our hashtag trended" or "2,000 Twitter users took part in the contest"), and only then politics. The time when a botnet could be used for political struggle has passed.

Almost all of these accounts were created in March 2013. Does this mean that they have one creator?

Yes, it could mean that. But the bot creator and the person using the bots are rarely the same person. Usually it works a bit differently. For example, a programmer found a vulnerability in a service (not necessarily Twitter itself, but, for example, Mail.Ru, which allowed them to create one million email addresses) and built an enormous bot database.

There are known cases of databases of 20 million or 5 million bots being sold to one buyer.

Generally, these are bots of the lowest quality. From there, they are resold in smaller batches, possibly improved in quality along the way, and so on. It's the same with stolen accounts, except that the database isn't improved after being stolen, because stolen accounts are already of the highest quality.
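A cluster of creation dates like the March 2013 one mentioned above can be measured with a simple histogram. The sketch below assumes account creation dates are already available as a mapping from screen name to `datetime.date`; the function names and sample data are hypothetical. A high share in one month is consistent with a single batch registration, but, as Zimarin notes, it says nothing about who later bought and used the accounts.

```python
from collections import Counter
from datetime import date


def creation_month_histogram(accounts):
    """Count accounts per (year, month) of creation.

    `accounts` maps a screen name to its creation date; this structure is a
    hypothetical assumption for illustration.
    """
    return Counter((d.year, d.month) for d in accounts.values())


def dominant_month_share(accounts):
    """Return the busiest creation month and the share of accounts created in it."""
    if not accounts:
        raise ValueError("no accounts to analyze")
    counts = creation_month_histogram(accounts)
    month, n = counts.most_common(1)[0]
    return month, n / len(accounts)


sample = {
    "acct1": date(2013, 3, 2),
    "acct2": date(2013, 3, 5),
    "acct3": date(2013, 3, 20),
    "acct4": date(2011, 7, 14),
}
print(dominant_month_share(sample))
```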