Dr. Robert Epstein — I recently attended an all-day workshop on fake news at one of the top law schools in the US. By the end of the day, this roomful of impressive experts, among them representatives from Google, Microsoft, the New York Times, and Buzzfeed, couldn't even agree on a definition of "fake news." Worse still, every suggestion for controlling or regulating this indefinable kind of news was immediately shot down.

So what should we think about new efforts by Google and Facebook to use algorithms to protect the public from fake news?

In my view, we should be both unimpressed and frightened.

We should be unimpressed because fake news isn't really the problem most people think it is. Although the fake news stories about Hillary Clinton that proliferated rapidly on the internet last November probably cost her many votes, fake news is not, generally speaking, a serious threat to democracy for two reasons.

Fake News is Like Billboards

First, fake news is competitive. It is exactly like billboards and TV commercials – many of which also contain false claims. For every fake news story you post about me, I can post two about you. I can also post true stories to try to correct the record or even true stories exposing the fake news stories you have been posting. Sound familiar? Mud-slinging is endemic to politics and always will be, and no algorithm will ever stop it.

The speed of proliferation is not in and of itself a problem. We mistakenly have come to believe that the rapid proliferation of ideas or information is a new phenomenon, made possible only in recent years because of the growth of the internet. We somehow have forgotten that long before the internet was invented, news stories and false rumors often spread through populations at lightning speed: news about the stock market crash of 1929, about V-J Day in 1945, about the assassination of John F. Kennedy in 1963. On March 19, 1935, a baseless rumor about a beaten child quickly spread throughout the Harlem area of New York City, resulting in widespread rioting, multiple deaths, and millions of dollars in property damage. People were gossipy, gullible social beings long before social media platforms were invented.

You Can See Fake News

Second, fake news stories are visible sources of influence. When, through Facebook's newsfeed or Google's search engine, you come across a story claiming that Hillary Clinton is a Martian, you know you are being influenced. You can see the story in front of you, just as you can see a physical newspaper or a billboard or TV commercial. Visible sources of influence impact people quite predictably: people pay attention to information that supports their biases and beliefs, and they ignore or reject the rest.

Fake news stories are troubling; they might even be impacting hundreds of thousands or millions of people every day. But let's put this into perspective: bias in search results and search suggestions is likely affecting billions of people every day without their knowledge. As a means of influence, fake news is relatively trivial in its impact.

The Scary Stuff

Because fake news stories are both visible and competitive, I don't find them very frightening. What does scare me is the idea that rapacious corporations like Google and Facebook are going to decide what fake news is, then decide which news stories meet their criteria, and then either label those stories as suspect or, in the extreme case, make those stories disappear.

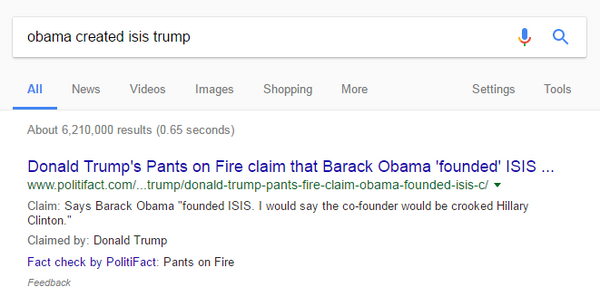

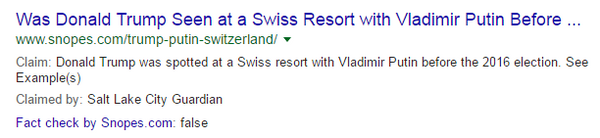

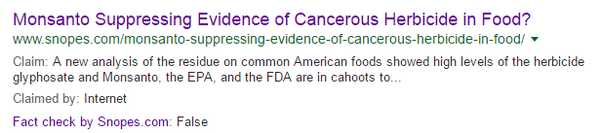

As I learned at that law school conference, it is unlikely that any group of reasonable people is ever going to agree on a definition of fake news, so I'm not going to bother offering one here. But to get a sense of how difficult this problem is, please consider the following questions:

- Is a news story fake just because it gets some things wrong? How much does it need to get wrong for us to call it "fake"? Twenty percent of the facts it reports? Fifty percent? Isn't the accurate part of the story still accurate?

- Is an accurate story released by a bogus news site – say, a website that pretends to be part of a news organization that doesn't exist – legitimate or fake? Remember, this particular story is accurate; it's just the news organization that's fake. What should we do: run the story or bury it?

- Is an accurate story released by a news site (like Sputnik) that is associated with an adversarial foreign government (like Russia) legitimate or fake? I ask this in part because the last time I published an article in Sputnik, mainstream American news organizations immediately brushed my article aside, even though I was accurately reporting on new research I had been conducting on Google's autocomplete.

- Is a news story fake just because it is slanted toward one political perspective? If so, couldn't many stories released by both Fox News and The New York Times be considered fake? Wouldn't all stories released by Breitbart automatically be suppressed?

- Do satires qualify as fake news stories? Is, for example, the story I published last year about Google donating its search engine to the American public fake news? Should it be expunged? How could an algorithm distinguish fake news from satire when most people cannot?

Whether you use people or an algorithm (which is just a set of rules written by people) to try to answer such questions, you turn a problem that people can generally get their heads around – the proliferation of fake news stories – into a nightmarish set of problems that makes the head spin.

The false positive problem. If you or your algorithm correctly identifies and quashes a fake news story (and I say that lightly, because we can't even define fake news), this is called a "true positive" – the correct identification of what you're looking for. But what if you mistakenly identify a valid news story as fake? This is called a "false positive," and from a public policy perspective, it is a disaster, just like a false positive in a test for cancer. You have now told the public – the whole world, maybe – that a valid news story is invalid; perhaps you even deleted the story from feeds so that virtually no one in the world can see it.

Having been a programmer most of my life, I can guarantee you that any algorithmic system meant to quash fake news will inevitably produce false positives. When do the dangers of obliterating real news stories outweigh the dangers of allowing fake news stories to exist?
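To see how quickly false positives can pile up, consider a minimal sketch in Python. Every number in it is a hypothetical assumption chosen for illustration, not a measurement of any real system: the daily story count, the share of stories that are fake, and the filter's two error rates. The function name `confusion_counts` is likewise invented for this example.

```python
def confusion_counts(total_stories, fake_share, true_positive_rate, false_positive_rate):
    """Return expected (true_positives, false_positives) for a hypothetical filter."""
    fake = total_stories * fake_share           # stories that really are fake
    real = total_stories - fake                 # stories that are valid
    true_positives = fake * true_positive_rate  # fake stories correctly flagged
    false_positives = real * false_positive_rate  # valid stories wrongly flagged
    return true_positives, false_positives


# Hypothetical assumptions: 1,000,000 stories a day, 1% of them fake,
# 95% of fake stories caught, 2% of valid stories wrongly flagged.
tp, fp = confusion_counts(1_000_000, 0.01, 0.95, 0.02)
flagged = tp + fp

print(f"fake stories caught: {tp:.0f}")
print(f"valid stories wrongly flagged: {fp:.0f}")
print(f"share of flagged stories that are valid: {fp / flagged:.0%}")
```

Under these assumed rates, roughly two out of every three flagged stories would be valid ones, simply because valid stories vastly outnumber fake ones. Different assumptions would give different figures, but the pattern holds whenever the thing being hunted is rare.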

The only good news here is that if these companies proceed with aggressive programs to suppress fake news stories, they will move closer toward the crosshairs of regulators. Until now, companies like Google and Facebook have claimed protection under Section 230 of the US Communications Decency Act (CDA 230), which states that "No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider" (47 U.S.C. § 230). In other words, Google and Facebook aren't responsible for anything they show you because they don't originate the content they show you; they're just middlemen, not "publishers."

But the more obviously these companies act like publishers – picking and choosing which news stories are valid – the less protection they will have under CDA 230. As I noted in "The New Censorship," Google is now the world's biggest censor; acting as a Super Editor to make decisions about news stories will make its role as censor more obvious to regulators, legislators and judges.

The technological tug-of-war. More than a decade ago, Google laid down the law and said, more or less, "How dare you try to trick our search algorithm into listing your crappy website higher in our search rankings? We will crush you like a bug." Did SEO (Search Engine Optimization) experts – the people whose job it is to push your business higher in search rankings – respond by lying down and dying? Nope. In fact, by early 2016, the SEO industry was doing an estimated $65 billion a year in business. Gaming Google is very profitable, it seems. Google keeps adjusting its search algorithm to protect itself from being gamed, and the gamers respond with better algorithms to game it.

I mention this because the same thing will happen with algorithms that try to suppress fake news stories. People who want to spread such stories will simply program around the algorithms.

Fake news is troublesome, for sure – always has been and always will be. But allowing big, unregulated technology companies to manage fake news – in other words, to manage all our news – is potentially far more harmful than fake news itself.

___________________

EPSTEIN (@DrREpstein) is Senior Research Psychologist at the American Institute for Behavioral Research and Technology in Vista, California. He holds a PhD from Harvard University and has published fifteen books on artificial intelligence and other topics. He is also the former editor-in-chief of Psychology Today.

The views expressed in this article are solely those of the author and do not necessarily reflect the official position of Sputnik.