The idea of artificial intelligence has fascinated and puzzled scientists, engineers and philosophers since the middle of the 20th century.

Today, amid news that China and the United States are engaged in a race for AI superiority, and major progress by companies such as Google in making AI a reality, philosophical debates continue. This week, researchers gathered in Long Beach, California for the Neural Information Processing Systems Conference to discuss the possible dangers relating to the growing power of AI, and how to imbue it with an 'ethical conscience.'

Those who take the pessimistic view fear the unknown, and what thoughts may appear in the AI's virtual mind. Most scientists, meanwhile, are more inclined toward the optimistic one.

First things first, it's necessary to define AI. A device or program that can simply perform calculations faster than a human being does not qualify. A program that is not truly capable of independent learning cannot be called artificial intelligence. At the same time, the program's material shell, i.e. the device in which its 'virtual brain' is embedded, does not matter, and can be anything from a portable device to a quantum computer, a robot or an airplane. The 'intelligence' of the device and its program is determined by the presence or absence of a learning algorithm.

As for the AI's intentions, most scientists engaged in the creation of AI solutions do not share the pessimists' view that machines capable of thought will seek to enslave or exterminate humanity the moment they become conscious.

"Hollywood movies like The Terminator or The Matrix are quite divorced from reality," Samsonovich said, speaking to Russia's RIA Novosti news agency. "At the moment, computers are so dependent on human beings that, even if they were capable of destroying us, they would not be able to exist for long afterward. Of course, it's possible that in the future AI will learn how to extract minerals on its own, build factories and provide itself with energy, but I don't think this will happen within this century."

In any case, the professor stressed that forecasting advances in any particular field of science "is a thankless task, since too much depends on financing."

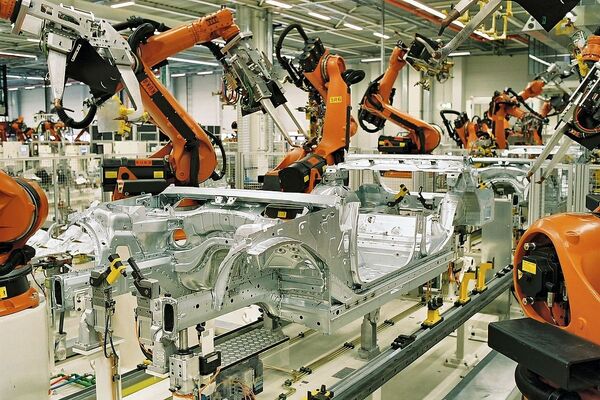

Some observers, quite reasonably, predict that the creation of AI risks leaving people out of a job. Many jobs, in areas like navigation and vehicle assembly, have already been taken over by computer programs and robots. But practice has also shown that the mechanization of labor results in the creation of new professions in which human beings' intellectual and creative capabilities become more valuable.

Institute of Intelligent Cybernetic Systems' deputy director Valentin Klimov emphasized that thinking computer systems have the potential to help save lives, not destroy them.

"The AI system we are working on will be able to predict when a set of factors will lead to a breakdown of a particular major component of a [nuclear] power station," Klimov said. "The fact is, different parts of large mechanisms rarely fail alone. Most often, accidents result from simultaneous breakdowns, caused, for example, by multiple worn-out components. A human being alone simply cannot calculate and analyze such a large amount of data to understand where a malfunction will arise and what will cause it."

Of course, the fate of humanity aside, the accelerating development of machine intelligence also gives rise to other questions, including ethical ones. For example, should AI be considered an individual? What rights and responsibilities will it be given? With functional AI seemingly just around the corner, these questions will have to be answered, one way or another.